
(4) Kubernetes


Kubernetes - Manual Deployment [ 3 ]

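1 Deploy the work nodes
    1.1 Create the working directories and copy the binaries
    1.2 Deploy kubelet
        1.2.1 Create the configuration file
        1.2.2 Configuration file
        1.2.3 Generate the bootstrap kubeconfig file that kubelet uses to first join the cluster
        1.2.4 Manage kubelet with systemd
        1.2.5 Start kubelet and enable it at boot
        1.2.6 Approve the kubelet certificate request and join the cluster
    1.3 Deploy kube-proxy
        1.3.1 Create the configuration file
        1.3.2 Configuration parameter file
        1.3.3 Generate the kube-proxy certificate files
        1.3.4 Generate the kube-proxy.kubeconfig file
        1.3.5 Manage kube-proxy with systemd
        1.3.6 Start kube-proxy and enable it at boot
    1.4 Deploy the network component (Calico)
    1.5 Authorize the apiserver to access kubelet

2 Add new Work Nodes
    2.1 Copy the deployed files to the new nodes
    2.2 Delete the kubelet certificate and kubeconfig files
    2.3 Change the hostname
    2.4 Start the services and enable them at boot
    2.5 Approve the new nodes' kubelet certificate requests on the Master
    2.6 Check node status

3 Deploy the Dashboard
    3.1 Deploy the Dashboard
    3.2 Access the Dashboard

4 Deploy CoreDNS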

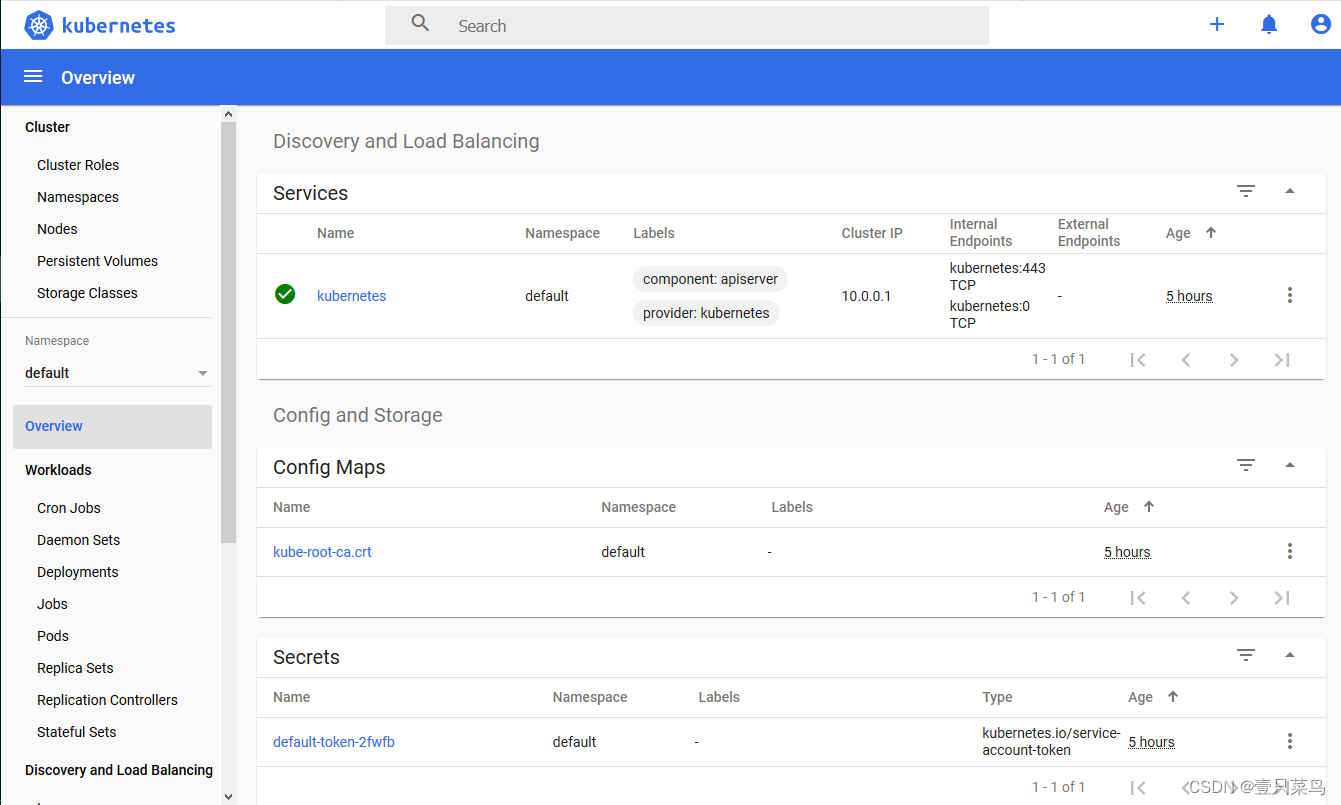

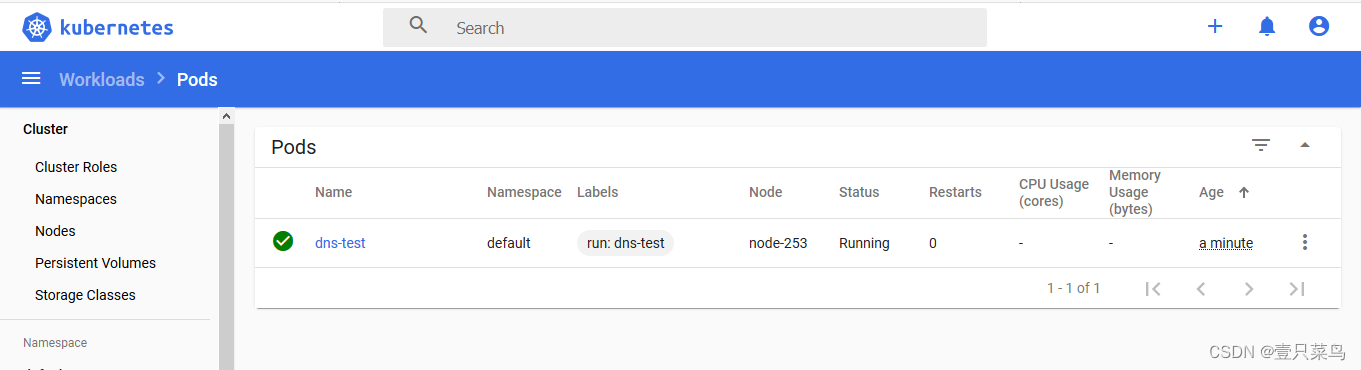

Environment

| Hostname | Operating system | IP address | Required components |
|----------|------------------|----------------|---------------------|
| node-251 | CentOS 7.9 | 192.168.71.251 | All components (to make good use of resources) |
| node-252 | CentOS 7.9 | 192.168.71.252 | All components |
| node-253 | CentOS 7.9 | 192.168.71.253 | docker kubelet kube-proxy |

We have already deployed the etcd cluster on node-251 and node-252, installed docker on every machine, and deployed kube-apiserver, kube-controller-manager and kube-scheduler on node-251 (the master node).

Next we deploy kubelet, kube-proxy and the remaining components on node-252 and node-253.

1 Deploy the work nodes

We do the configuration on the master node and sync it to the other nodes afterwards. The master node also serves as one of the work nodes.

1.1 Create the working directories and copy the binaries

Note: create the working directories on every work node, then copy the binaries from the k8s-server package on the master node to all work nodes:

    cd /opt/kubernetes/server/bin/
    for master_ip in {1..3}
    do
      echo ">>> node-25${master_ip}"
      ssh root@node-25${master_ip} "mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}"
      scp kubelet kube-proxy root@node-25${master_ip}:/opt/kubernetes/bin/
    done

1.2 Deploy kubelet

1.2.1 Create the configuration file

    cat > /opt/kubernetes/cfg/kubelet.conf /opt/kubernetes/cfg/kubelet-config.yml /usr/lib/systemd/system/kubelet.service /opt/kubernetes/cfg/kube-proxy-config.yml

2 Add new Work Nodes

2.1 Copy the deployed files to the new nodes

    for i in {2..3}; do scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@node-25$i:/usr/lib/systemd/system; done
    for i in {2..3}; do scp -r /opt/kubernetes/ssl/ca.pem root@node-25$i:/opt/kubernetes/ssl/; done

2.2 Delete the kubelet certificate and kubeconfig files

Delete these files on the work nodes:

    for i in {2..3}; do
      ssh root@node-25$i "rm -f /opt/kubernetes/cfg/kubelet.kubeconfig && rm -f /opt/kubernetes/ssl/kubelet*"
    done

Note: these files are generated automatically once a certificate request has been approved. They differ for every Node, so the copies taken from the master must be deleted.

2.3 Change the hostname

On each work node, change the hostname in the configuration files to that node's own name. The two settings to change are the ones shown here on node-251:

    [root@node-251 kubernetes]# grep 'node-251' /opt/kubernetes/cfg/kubelet.conf
    --hostname-override=node-251 \
    [root@node-251 kubernetes]# grep 'node-251' /opt/kubernetes/cfg/kube-proxy-config.yml
    hostnameOverride: node-251

2.4 Start the services and enable them at boot

Run on each work node:

    systemctl daemon-reload
    systemctl start kubelet kube-proxy
    systemctl enable kubelet kube-proxy

2.5 Approve the new nodes' kubelet certificate requests on the Master

    # List the certificate requests
    [root@node-251 kubernetes]# kubectl get csr
    NAME                                                   AGE     SIGNERNAME                                    REQUESTOR           CONDITION
    node-csr-0nA37A70PmadfExLLiUFejGF0vggS-3O-zMHma5AMnc   85m     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    node-csr-7T9xXWh8imtC1tfHVpwV6Y6V02UhqIqG5sDRG_PlL34   99s     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
    node-csr-XHl-UgI7kFXewHESTcwWdnCV1L9AKDgDM2RlE3ErGOE   2m10s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

    # Approve
    [root@node-251 kubernetes]# kubectl certificate approve node-csr-7T9xXWh8imtC1tfHVpwV6Y6V02UhqIqG5sDRG_PlL34
    certificatesigningrequest.certificates.k8s.io/node-csr-7T9xXWh8imtC1tfHVpwV6Y6V02UhqIqG5sDRG_PlL34 approved
    [root@node-251 kubernetes]# kubectl certificate approve node-csr-XHl-UgI7kFXewHESTcwWdnCV1L9AKDgDM2RlE3ErGOE
    certificatesigningrequest.certificates.k8s.io/node-csr-XHl-UgI7kFXewHESTcwWdnCV1L9AKDgDM2RlE3ErGOE approved

    # Check again
    [root@node-251 kubernetes]# kubectl get csr
    NAME                                                   AGE     SIGNERNAME                                    REQUESTOR           CONDITION
    node-csr-0nA37A70PmadfExLLiUFejGF0vggS-3O-zMHma5AMnc   85m     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    node-csr-7T9xXWh8imtC1tfHVpwV6Y6V02UhqIqG5sDRG_PlL34   2m24s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    node-csr-XHl-UgI7kFXewHESTcwWdnCV1L9AKDgDM2RlE3ErGOE   2m55s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued

2.6 Check node status

The new nodes take a little while to become Ready because they first download some initialization images.

    [root@node-251 kubernetes]# kubectl get nodes
    NAME       STATUS     ROLES    AGE   VERSION
    node-251   Ready      <none>   86m   v1.20.15
    node-252   NotReady   <none>   84s   v1.20.15
    node-253   NotReady   <none>   72s   v1.20.15

3 Deploy the Dashboard

Deploy on the master node.

3.1 Deploy the Dashboard

Official tutorial: https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

    [root@node-251 kubernetes]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

For accessing the Dashboard through a NodePort, see https://www.cnblogs.com/wucaiyun1/p/11692204.html

The modified recommended.yaml:

    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      type: NodePort
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      selector:
        k8s-app: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-csrf
      namespace: kubernetes-dashboard
    type: Opaque
    data:
      csrf: ""
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-key-holder
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-settings
      namespace: kubernetes-dashboard
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    rules:
      # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
      - apiGroups: [""]
        resources: ["secrets"]
        resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
        verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
      - apiGroups: [""]
        resources: ["configmaps"]
        resourceNames: ["kubernetes-dashboard-settings"]
        verbs: ["get", "update"]
      # Allow Dashboard to get metrics.
      - apiGroups: [""]
        resources: ["services"]
        resourceNames: ["heapster", "dashboard-metrics-scraper"]
        verbs: ["proxy"]
      - apiGroups: [""]
        resources: ["services/proxy"]
        resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
        verbs: ["get"]
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    rules:
      # Allow Metrics Scraper to get metrics from the Metrics server
      - apiGroups: ["metrics.k8s.io"]
        resources: ["pods", "nodes"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
            - name: kubernetes-dashboard
              image: kubernetesui/dashboard:v2.0.0-beta4
              imagePullPolicy: Always
              ports:
                - containerPort: 8443
                  protocol: TCP
              args:
                - --auto-generate-certificates
                - --namespace=kubernetes-dashboard
                # Uncomment the following line to manually specify Kubernetes API server Host
                # If not specified, Dashboard will attempt to auto discover the API server and connect
                # to it. Uncomment only if the default does not work.
                # - --apiserver-host=http://my-address:port
              volumeMounts:
                - name: kubernetes-dashboard-certs
                  mountPath: /certs
                  # Create on-disk volume to store exec logs
                - mountPath: /tmp
                  name: tmp-volume
              livenessProbe:
                httpGet:
                  scheme: HTTPS
                  path: /
                  port: 8443
                initialDelaySeconds: 30
                timeoutSeconds: 30
          volumes:
            - name: kubernetes-dashboard-certs
              secret:
                secretName: kubernetes-dashboard-certs
            - name: tmp-volume
              emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 8000
          targetPort: 8000
      selector:
        k8s-app: dashboard-metrics-scraper
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: dashboard-metrics-scraper
      template:
        metadata:
          labels:
            k8s-app: dashboard-metrics-scraper
        spec:
          containers:
            - name: dashboard-metrics-scraper
              image: kubernetesui/metrics-scraper:v1.0.1
              ports:
                - containerPort: 8000
                  protocol: TCP
              livenessProbe:
                httpGet:
                  scheme: HTTP
                  path: /
                  port: 8000
                initialDelaySeconds: 30
                timeoutSeconds: 30
              volumeMounts:
                - mountPath: /tmp
                  name: tmp-volume
          serviceAccountName: kubernetes-dashboard
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          volumes:
            - name: tmp-volume
              emptyDir: {}

Apply the manifest:

    [root@node-251 kubernetes]# kubectl apply -f recommended.yaml

Check that the pods and the service have started:

    [root@node-251 kubernetes]# kubectl --namespace=kubernetes-dashboard get service kubernetes-dashboard
    NAME                   TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    kubernetes-dashboard   NodePort   10.0.0.117   <none>        443:30001/TCP   27s
    [root@node-251 kubernetes]# kubectl get pods --namespace=kubernetes-dashboard -o wide
    NAME                                         READY   STATUS    RESTARTS   AGE   IP              NODE       NOMINATED NODE   READINESS GATES
    dashboard-metrics-scraper-7b9b99d599-bgdsq   1/1     Running   0          41s   172.16.29.195   node-252   <none>           <none>
    kubernetes-dashboard-6d4799d74-d86zt         1/1     Running   0          41s   172.16.101.69   node-253   <none>           <none>

3.2 Access the Dashboard

Create a service account and bind it to the built-in cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    [root@node-251 kubernetes]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
    Name:         dashboard-admin-token-nkxqf
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: dashboard-admin
                  kubernetes.io/service-account.uid: 7ddf0af3-423d-4bc2-b9b0-0dde859b2e44

    Type:  kubernetes.io/service-account-token

    Data
    ====
    ca.crt:     1363 bytes
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImJ6R0VRc2tXRURINE5uQmVBMDNhdl9IX3FRRl9HRVh3RFpKWDZMcmhMX2MifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbmt4cWYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2RkZjBhZjMtNDIzZC00YmMyLWI5YjAtMGRkZTg1OWIyZTQ0Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.qNxZy8eARwZRh1wObRwn3i8OeOcnnD8ubrgOu2ZVmwmERJ3sHrNMXG5UDJph_SzaNtEo43o22zigxUdct8QJ9c-p9A5oYghuKBnDY1rR6h34mH4QQUpET2E8scNW3vaYxZmqZi1qpOzC72KL39m_cpbhMdfdyNweUY3vUDHrfIXfvDCS82v2jiCa4sjn5aajwwlZhywOPJXN7d1JGZKgg1tzwcMVkhtIYOP8RB3z-SfA1biAy8Xf7bTCPlaFGGuNlgWhgOxTv8M7r6U_KuFfV7S-cQqtEEp1qeBdI70Bk95euH3UJAx55_OkkjLx2dwFrgZiKFXoTNLSUFIVdsQVpQ

Access URL: https://NodeIP:30001. Log in to the Dashboard with the token printed above (if the browser reports an HTTPS error, try Firefox).

4 Deploy CoreDNS

CoreDNS is mainly used to resolve Service names inside the cluster.

Reference: https://blog.csdn.net/weixin_46476452/article/details/127884162

coredns.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    rules:
      - apiGroups:
        - ""
        resources:
        - endpoints
        - services
        - pods
        - namespaces
        verbs:
        - list
        - watch
      - apiGroups:
        - discovery.k8s.io
        resources:
        - endpointslices
        verbs:
        - list
        - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health {
                lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
                fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . /etc/resolv.conf {
                max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/name: "CoreDNS"
        app.kubernetes.io/name: coredns
    spec:
      # replicas: not specified here:
      # 1. Default is 1.
      # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
          app.kubernetes.io/name: coredns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
            app.kubernetes.io/name: coredns
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
                topologyKey: kubernetes.io/hostname
          containers:
          - name: coredns
            image: coredns/coredns:1.9.4
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
          dnsPolicy: Default
          volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "CoreDNS"
        app.kubernetes.io/name: coredns
    spec:
      selector:
        k8s-app: kube-dns
        app.kubernetes.io/name: coredns
      clusterIP: 10.0.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP

Apply it and check the pods:

    kubectl apply -f coredns.yaml
    [root@node-251 kubernetes]# kubectl get pods -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-577f77cb5c-xcgn5   1/1     Running   3          157m
    calico-node-48b86                          1/1     Running   0          125m
    calico-node-7dfjw                          1/1     Running   2          157m
    calico-node-9d66z                          1/1     Running   0          125m
    coredns-6b9bb479b9-gz8zd                   1/1     Running   0          3m20s

Test whether name resolution works by starting a temporary busybox pod:

    [root@node-251 kubernetes]# kubectl run -it --rm dns-test --image=docker.io/library/busybox:latest sh
    If you don't see a command prompt, try pressing enter.
    / #
    / #
    / # ls
    bin    dev    etc    home   lib    lib64  proc   root   sys    tmp    usr    var
    / # pwd
    /
    / #
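The busybox session above only lists the root filesystem, so on its own it does not show that Service names resolve. A minimal sketch of a stricter check follows. It assumes that pods are configured to use the kube-dns Service address 10.0.0.2 from coredns.yaml (this depends on the kubelet `clusterDNS` setting, which is not shown here) and that `nslookup` prints a `Server:` line, as busybox builds commonly do. The helper name `answered_by` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original tutorial).
# Reads nslookup output on stdin and succeeds only if the first
# "Server:" line names the expected DNS server address.
answered_by() {
  expected="$1"
  # Take the second field of the first line whose first field is "Server:".
  server=$(awk '$1 == "Server:" { print $2; exit }')
  [ "$server" = "$expected" ]
}
```

Inside the test pod one would run `nslookup kubernetes.default.svc.cluster.local` and read the output by eye. To script it from the master, the same output can be piped into the helper, for example `kubectl run dns-test --rm -i --restart=Never --image=docker.io/library/busybox:latest -- nslookup kubernetes.default.svc.cluster.local | answered_by 10.0.0.2 && echo "answered by CoreDNS"`. The exact nslookup output format differs between busybox versions, so treat the parsing as an assumption to adapt.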



This completes a single-Master k8s deployment. A highly available master setup will be deployed in a later installment.
