| pytorch如何设置batch | 您所在的位置:网站首页 › epoch和batchsize怎么设置 › pytorch如何设置batch |

pytorch如何设置batch

|

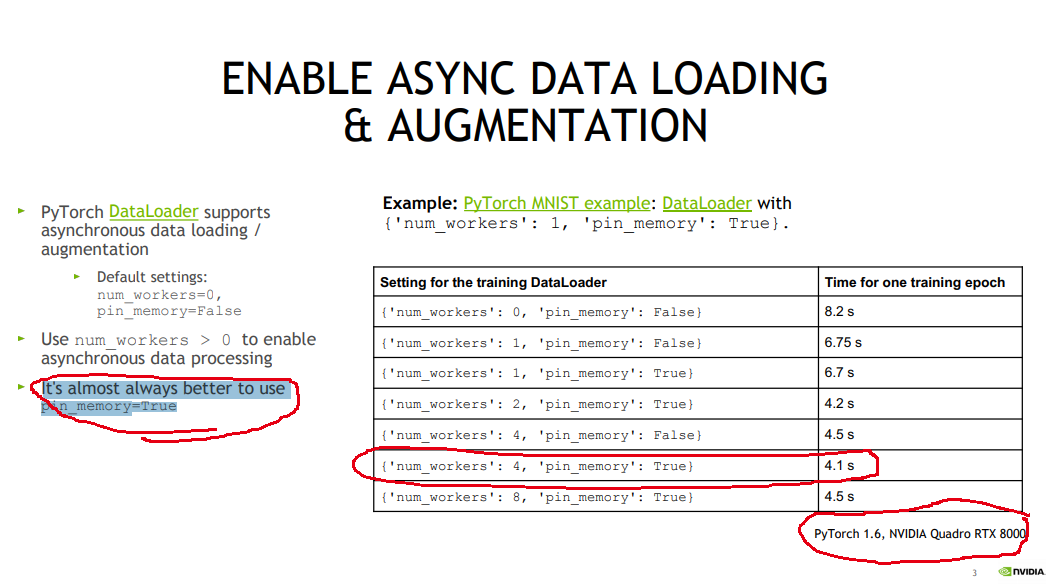

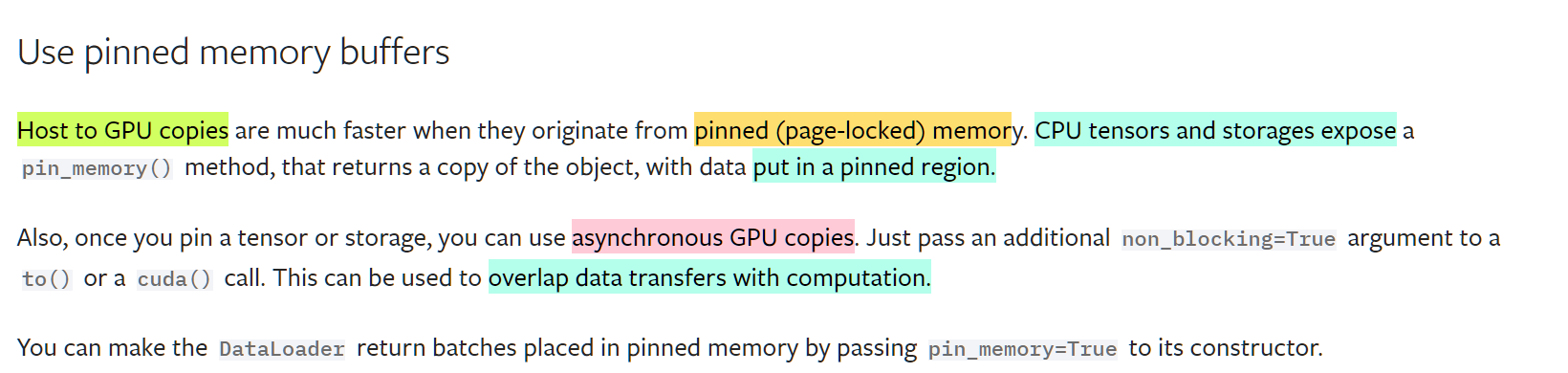

众说纷纭, 不如看文档 torch.utils.data - PyTorch 1.11.0 documentation 自动榨干GPU: https://github.com/BlackHC/toma#torch-memory-adaptive-algorithms-toma1. 懒得纠结, 那就set num_workers =4 x number of available GPUs (训练没用上的空闲显卡不算)这个问题涉及的因素多, 不同情况有不同最优值. 具体分析: 没太懂的示意图...   出处: Data loader takes a lot of time for every nth iteration num_worker大: 下一轮迭代的batch可能在上一轮/上上一轮...迭代时已经加载好了。 坏处是GPU memory开销大 (这是开了pin memory的情况吧) ,也加重了CPU负担。 显存=显卡内存(内存单词是memory),作用是用来存储显卡芯片处理过或者即将提取的渲染数据。 显存和GPU的关系有点类似于内存和CPU的关系 CPU不能直接调用存储在硬盘上的系统、程序和数据,必须首先将硬盘的有关内容存储在内存中,这样才能被CPU读取运行。 内存作为硬盘(外存)和CPU的“中转站”,对电脑运行速度有较大影响。 https://zhuanlan.zhihu.com/p/31558973 显存=显卡内存(内存单词是memory),作用是用来存储显卡芯片处理过或者即将提取的渲染数据。 显存和GPU的关系有点类似于内存和CPU的关系 CPU不能直接调用存储在硬盘上的系统、程序和数据,必须首先将硬盘的有关内容存储在内存中,这样才能被CPU读取运行。 内存作为硬盘(外存)和CPU的“中转站”,对电脑运行速度有较大影响。 https://zhuanlan.zhihu.com/p/31558973CPU的物理个数:grep 'physical id' /proc/cpuinfo | sort | uniq | wc -l 结果为2,说明CPU有两个。 每个CPU的核数:cat /proc/cpuinfo |grep "cores"|uniq 10,说明每个10核。 cpu核数 = 2x10 但如果性能瓶颈在cpu计算(比如NMS等后处理)上,继续增大num_workers加重了CPU负担,反而会降低性能。 Actually for a batch_size=32, num_workers=16 seem to be quite big. Have you tried any lower number of workers? say num_workers=4 or 8. The extra time T (T is about 15s or more when batch_size=32 and num_workers=16) it costs for every Nth iteration is directly proportional to the thread number N. 2. pytorch 1.6以上:自动混合精度|using AMP over regular FP32 training yields roughly 2x – but upto 5.5x – training speed-ups.  increasing num_workers will increase your CPU memory consumption. 3. Tensor Core you can squeeze out some additional performance (~ 20%) from AMP on NVIDIA Tensor Core GPUs if you convert your tensors to theChannels Last memory format. Refer tothis sectionin the NVIDIA docs for an explanation of the speedup and more about NCHW versus NHWC tensor formats. 4. 多卡并行训练时:pytorch:一般有个master gpu, 若所有卡的batch size相同,master的显存满了,其他闲着很多。之前试过手动指定各卡的chunk size,让master gpu的batch size小,这样就能榨干所有卡的显存。但据说这样会导致batch norm时,效果变差。最终训练效果明显变差。 Pytorch并行方式: DataParallel(DP),较为简单,但是多线程训练,且主卡显存占用 比其他卡会多。DistributedDataParallel(DDP)。DDP是多进程,将模型复制到多块卡上计算,数据分配较均衡。 keras: 把模型放在cpu时,没有master gpu 5. 推断时的batchsizeforvalidation_batch_sizeandtest_batch_size, you should pick the largest batch size that your hardware can handle without running out of memory and crashing. Finding this is usually a simple trial and error process. The larger your batch size at inference time, the faster it will be, since more inputs can be processed in parallel. centernet的resdcn18,batchsize64左右时,num woker调到20,每个epoch比设为0快10分钟(原来是17min) 7. pin memory = True 如果开了pin memory: 每个worker都需要缓存一个batch的数据.batch size和num_workers都大, 显存会炸 8. Pytorch的显存管理:除了tensor会占显存,pytorch为了加速,还会占着一部分显存(占着茅坑不拉屎,为了方便随时去la)。【空间换时间】 PyTorch uses a caching memory allocator to speed up memory allocations. This allows fast memory deallocation without device synchronizations. However, the unused memory managed by the allocator will still show as if used in nvidia-smi. You can use memory_allocated() and max_memory_allocated() to monitor memory occupied by tensors, and use memory_reserved() and max_memory_reserved() to monitor the total amount of memory managed by the caching allocator. Calling empty_cache() releases all unused cached memory from PyTorch so that those can be used by other GPU applications. 【非pytorch的任务?】However, the occupied GPU memory by tensors will not be freed, so it can not increase the amount of GPU memory available for PyTorch. For more advanced users, we offer more comprehensive memory benchmarking via memory_stats(). complete snapshot of the memory allocator state :memory_snapshot(), which can help you understand the underlying allocation patterns produced by your code. Use of a caching allocator can interfere with memory checking tools such as cuda-memcheck. To debug memory errors using cuda-memcheck, set PYTORCH_NO_CUDA_MEMORY_CACHING=1 in your environment to disable caching. 扩展阅读:物理cpu数:主板上实际插入的cpu数量,可以数不重复的 physical id 有几个(physical id)cpu核数:单块CPU上面能处理数据的芯片组的数量,如双核、四核等 (cpu cores)查看系统内存(不是显卡内存/显存):在命令行中输入“cat /proc/meminfo | grep MemTotal”to readPyTorch Profiler 2. PyTorch Profiler With TensorBoard |

【本文地址】